DeepSpeech 0.9 - mature, polished, fast, ubiquitous

DeepSpeech is an open-source speech-to-text (STT) engine powered by deep learning. DeepSpeech offers high quality, offline, low latency speech-to-text technology that's simple to integrate into your applications, thanks to our easy-to-use API, which supports multiple architectures, platforms, and programming languages. It leverages TensorFlow Lite for fast and compact inference on low power platforms. DeepSpeech is the result of the hard work of a small team of brilliant people I've had the pleasure to work with over the past few years, and a large group of interested developers, users and organizations.

After volunteering to write the first article documenting our work on the project, I unofficially inherited the responsibility of writing about our progress (in typical open source fashion, good deeds are punishedrewarded with ownership). I did so in two subsequent articles for Mozilla Hacks. These past articles have focused strongly on technical aspects and recognizing the work of our amazing external contributors. This article will also cover technical details and contributions, but this time I would like to start by talking about my colleagues and former colleagues and some of their contributions that were instrumental in building DeepSpeech.

The folks who made it possible

Alexandre Lissy figured out the (at the time scarcely documented) TensorFlow Lite API and internals to integrate it into our native client, including adding cross-compilation support for ARM platforms, to enable DeepSpeech to work on Android, Raspberry Pi, and eventually iOS. He also built our extensive continuous integration setup, leveraging Mozilla's TaskCluster project and getting us CI coverage on all our supported platforms and configurations. Together with other French speaking members of our community, Alex coordinated community efforts on building French models for DeepSpeech.

Chris Lord reverse engineered the TensorFlow build system to figure out a clever way to integrate it into our C++ inference library, back when both TensorFlow and Bazel were younger, less mature, and less documented projects. Chris also reimplemented the Python feature computation library we used in C, getting the first version of our inference API up and running from feature computation all the way to presenting results.

Kelly Davis lead the team with attention and care from the beginning. I'm deeply grateful for his leadership and mentoring, and was always inspired by how hard working and professional he's always been. Kelly wrote the initial replication of the Deep Speech paper from Baidu. He connected our work and our team with interested companies, research groups and governments all around the world, multiplying our reach and impact. He secured grants that enabled the team to work on new, impactful projects such as the Bergamot browser machine translation project.

Tilman Kamp built a cluster management and job scheduler system for our in-house GPU cluster, enabling everyone on the team to run experiments without stepping on each other's feet. Tilman also optimized the training data input pipeline for our cluster; found, imported and cleaned up several English and German datasets for experiments; overhauled our data augmentation code and added several augmentation passes; and built an automatic speech aligner with DeepSpeech called DSAlign, which was used to align tens of thousands of hours of speech data.

DeepSpeech 0.9

We have continuously worked to make our speech-to-text engine faster, more capable, and easier to use. Over time our architecture has changed dramatically, driven by research progress and invaluable feedback from early adopters. These improvements have led to growing adoption by developers, who rely on our technology to build voice-powered applications for their users around the world (and maybe even on the moon!).

Today DeepSpeech 0.9 is available for download from our GitHub release page under the Mozilla Public License. It is capable of performing speech-to-text in real-time on devices ranging from a Raspberry Pi 4 to server-grade hardware with GPUs. Bindings are available for Python, Node.JS, Electron, Java/Android, C#/.NET, Swift/iOS, Rust, Go, and more. Pre-trained models are also available for English and an experimental Mandarin Chinese model.

In this post, I’ll talk about how we got here, and what has changed since my last article. I'll cover major performance improvements for mobile devices, experimental iOS support, new model training capabilities to more easily create use-case specific models with your own data, and a new experimental Chinese model.

Our collaborative journey to production readiness

DeepSpeech started as our implementation of a speech-to-text architecture proposed in a series of academic papers from Baidu. Our goal was to implement an open source STT engine that could scale to many languages and that was easy to use by application developers, without requiring deep learning expertise. We released early, often, and in the open. This enabled us to incorporate valuable learnings from production deployments, shaping our project for today’s 0.9 release.

Previously, I’ve talked about our initial efforts towards reaching high accuracy transcriptions in our engine. That work proved that the foundations of our technology were solid. It also set the path from research exploration to production-ready software: optimization, API design, model packaging, easy-to-use language bindings, high quality documentation, and more. In particular, one of our first challenges was to improve latency for real-world use cases, which required rebuilding our architecture to support real-time streaming capabilities. That means doing work in small chunks, as soon as the input data is available. I wrote about some of the technical details involved on Mozilla Hacks.

As each part of this work fell into place we started to see the exciting results. So did developers interested in speech recognition and voice interactions. A community of early adopters formed, and they helped us identify gaps, and focus on the features that have the most impact for production applications. Our contributors also participated in the Common Voice project to start building speech datasets for their own languages. In addition, we collaborated with academic researchers to use Common Voice data and DeepSpeech technology to publish the first ever automatic speech recognition results for languages like Breton, Tatar and Chuvash. Some of our contributors built entire features from scratch. This work culminated in a feature packed 0.6 release.

The work on performance didn't stop, but we additionally focused on API stability and polish, and code robustness. Since 0.6 we've done a complete review of our API endpoints, fixing inconsistencies and improving usability. We've made it easier than ever to use DeepSpeech, paving the way for seamless upgrades to future versions. Our model files now include embedded default values for all configuration parameters. We’ve designed a new packaging format for our external scorer, bundling it into a single file instead of two. We added versioning metadata to all packages. Combined, these changes mean that the vast majority of users will be able to upgrade to new models by simply replacing the model files with new ones, no code changes necessary.

Continuous improvements on performance

On the performance front, we have updated our packages to use TensorFlow 2.3, and enabled support for Ruy, a new TensorFlow Lite backend for faster CPU-backed operations. Ruy allows TensorFlow Lite to better scale to multiple CPU cores on mobile platforms. With this change, DeepSpeech now has excellent performance on even more devices. Previously, our reference platform for Android devices was the Qualcomm Snapdragon 835, a high-end system-on-a-chip (SoC) released in 2016 and used in phones like the Google Pixel 2. With version 0.9 of DeepSpeech, we now have real time performance on the Nokia 1.3, an entry level phone with significantly less CPU power, running on the Qualcomm 215 SoC. On that device we went from a Real Time Factor of 2.6 to 0.9, which means the system can keep up with audio as it is being streamed from a microphone, in real time.

In response to feedback from our users, we have also started publishing packages with TensorFlow Lite on all platforms. Despite being optimized for mobile devices, our TensorFlow Lite packages offer some interesting performance characteristics, from smaller models to better startup speed. Depending on the type of workload expected in your application, it might be better to use the TensorFlow Lite version of DeepSpeech everywhere, not just on low power devices. On desktop platforms, you can now try the TensorFlow Lite version by simply installing the “deepspeech-tflite” package instead of “deepspeech”. Packages for mobile platforms continue to use TensorFlow Lite by default.

DeepSpeech now runs on iOS

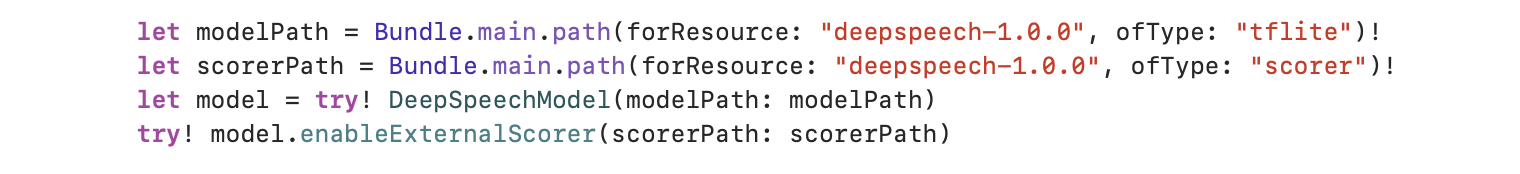

We’ve always had strong interest from developers working on mobile platforms, from Android phones to Raspberry Pis to digital kiosks. Our Java bindings for Android have been available since version 0.5.0 and have been downloaded over 11,000 times. With DeepSpeech 0.9, we are excited to add iOS support with Swift bindings. Our latest version can transcribe one second of audio in around a quarter of a second on an iPhone Xs. If you’re an iOS developer, we also have example source code with microphone streaming capabilities.You can use it to see how the Swift API works and get started on your own app.

You can try it today. Download the pre-built iOS framework and shared library from our release page and add them as dependencies in your Xcode project. We’d love to hear your feedback on the package and the bindings. Because it’s a recent addition, the Swift bindings are considered experimental and don’t yet offer API stability guarantees. We’re aiming to stabilize them in a future release.

If you're an iOS developer, we'd love to get your feedback and help in figuring out the final details to get DeepSpeech easy to use and ship in the App Store. See the contact page in the docs for how to get in touch.

New tools for creating custom models

All of the releases so far have included a pre-trained model for English, trained on thousands of hours of both openly available and licensed speech data. At the same time, the ability to create custom models is a core part of what makes DeepSpeech so powerful. It can allow applications with narrower vocabularies to achieve accuracies that general speech recognition offerings simply can’t match. One of the main challenges in creating new models from scratch is the need for large amounts of labeled data. Researchers have developed several techniques to alleviate this problem, and an important method is called transfer learning.

With transfer learning, instead of starting from scratch, you start from a baseline model that has already been trained with large amounts of data. You can think of it as taking a general model and specializing it for your data, and consequently for your use case. For example, this baseline model can be our English release model. This technique allows developers to create custom models using smaller amounts of data, and can even be used to bootstrap models for new languages.

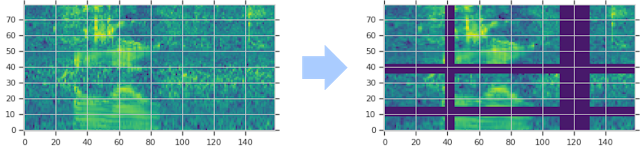

Another important technique is data augmentation, where you apply transformations to your samples while training the model in order to make the data more varied. Adding background noise, distortion and cuts, speeding the audio up, or slowing the audio down—these are examples of augmentations used for speech recognition.

In the 0.9 release we now include support for both transfer learning and data augmentation. You can combine these new features with our new optimized training data format, SampleDB, to create new models faster and make the most out of your training hardware. Check out the training documentation and join our Discourse forums to talk with other developers who are creating custom models.

New Mandarin Chinese models

Last year Rosana Ardila in collaboration with the Firefox Reality team led the creaion of a 2000 hours dataset of Mandarin Chinese transcribed speech data. This dataset enabled us to do some larger scale experiments in the challenging task of Mandarin Chinese speech recognition. Conventionally, DeepSpeech uses a fixed set of target symbols (called an alphabet in the documentation and code) for the language being trained. For Chinese, the list of symbols is huge, on the order of tens of thousands of characters. Directly modeling thousands of target symbols leads to models that are too memory intensive for low resource platforms.

Instead, we explored an idea first suggested in the Bytes are All You Need paper by Li et. al. With this approach, instead of hand picking the target symbols for each language, you train the model directly on UTF-8 encoded strings, with the model predicting byte values directly. This introduces an additional task: the model must simultaneously learn to recognize speech and to encode that recognition according to UTF-8, where a single codepoint can span up to 4 bytes. On the other hand, it means that with an "alphabet" consisting of 256 byte values, a model can cover any UTF-8 encodable language.

We're now releasing an experimental Chinese model as part of the DeepSpeech 0.9 release, trained using the technique described above, for interested developers to experiment with.

Build compelling voice applications with DeepSpeech

This technology is currently being used to create models for at least nine different languages in six different continents. That's as far as we know. Start-ups, established companies, research departments and hobbyists are using DeepSpeech to build innovative voice-driven applications.

Try DeepSpeech today by following the usage guide for your favorite programming language. The latest 0.9 English model has been trained on over 4,000 hours of speech data, and is available for download on the release page, where we also document all of the training parameters that were used to create it. You can also find the latest release in your favorite package manager: there are packages for Python, Node.JS, Electron, Java on Android, .NET on Windows and Swift on iOS. Beyond that, our community provides packages for Rust, Go, V, Nim, and more!

I would like to thank our community of incredible contributors, who have helped immensely by testing our software, filing issues, making code contributions, improving our documentation, helping other users on our discussion boards, sharing their valuable experience deploying our technology, and constantly surprising us with incredible creativity and collaborative spirit. Each and every single one of you is appreciated. Thank you all!